Use context: kubectl config use-context k8s-c2-AC

SSH into the controlplane node with ssh cluster2-controlplane1. Temporarily stop the kube-scheduler in a way that lets you start it again afterwards.

Create a single Pod named manual-schedule of image httpd:2.4-alpine, confirm it's created but not scheduled on any node.

Now you're the scheduler and have all its power, manually schedule that Pod on node cluster2-controlplane1. Make sure it's running.

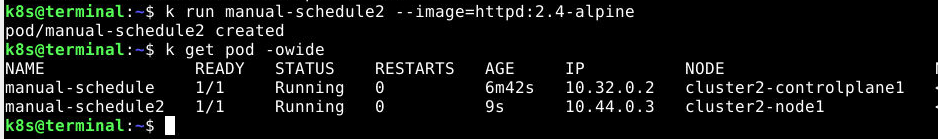

Start the kube-scheduler again and confirm it's running correctly by creating a second Pod named manual-schedule2 of image httpd:2.4-alpine and check if it's running on cluster2-node1.

Solution:

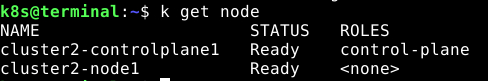

kubectl config use-context k8s-c2-AC

List the nodes and identify the controlplane node:

k get node

Connect to the controlplane node and check whether the scheduler is running:

ssh cluster2-controlplane1

root@cluster2-controlplane1:~# kubectl -n kube-system get pod | grep schedule

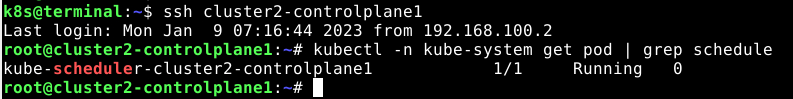

Stop the scheduler Pod by moving its YAML manifest out of the manifests directory:

root@cluster2-controlplane1:~# mv /etc/kubernetes/manifests/kube-scheduler.yaml .

root@cluster2-controlplane1:~# pwd

root@cluster2-controlplane1:~# kubectl -n kube-system get pod | grep schedule

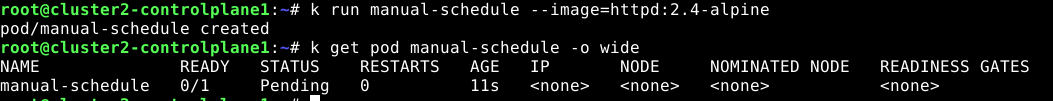
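
The scheduler stops here because the kubelet on the controlplane node only runs the manifests it finds in its static Pod directory. The round trip can be sketched as two small shell functions. The function names and the MANIFEST_DIR and BACKUP_DIR variables are made up for this example, and the default paths assume a kubeadm-style cluster with a root shell, as in this task.

```shell
# Hypothetical helpers; run them as root on the controlplane node.
# MANIFEST_DIR is the kubelet's static Pod directory (kubeadm default assumed).
MANIFEST_DIR="${MANIFEST_DIR:-/etc/kubernetes/manifests}"
# BACKUP_DIR is where the manifest is parked while the scheduler is stopped.
BACKUP_DIR="${BACKUP_DIR:-/root}"

stop_scheduler() {
  # The kubelet stops the static Pod once its manifest leaves the directory.
  mv "$MANIFEST_DIR/kube-scheduler.yaml" "$BACKUP_DIR/"
}

start_scheduler() {
  # Moving the manifest back lets the kubelet recreate the scheduler Pod.
  mv "$BACKUP_DIR/kube-scheduler.yaml" "$MANIFEST_DIR/"
}
```

Keeping the file outside the manifests directory, rather than deleting it, is what makes the stop temporary.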

Create the Pod and check its status:

k run manual-schedule --image=httpd:2.4-alpine

k get pod manual-schedule -o wide
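
To confirm "created but not scheduled" without reading the wide output by eye, the two relevant fields can be queried directly. This is a minimal sketch; pod_node and pod_phase are hypothetical helper names, and both assume the Pod is in the current namespace.

```shell
# Hypothetical helper: print the node a Pod is bound to, or "<none>" if unscheduled.
pod_node() {
  node=$(kubectl get pod "$1" -o jsonpath='{.spec.nodeName}')
  echo "${node:-<none>}"
}

# Hypothetical helper: print the Pod phase, for example Pending or Running.
pod_phase() {
  kubectl get pod "$1" -o jsonpath='{.status.phase}'
}
```

While the scheduler is stopped, pod_node manual-schedule should report no node and pod_phase manual-schedule should report Pending.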

Export the Pod to a file and edit it:

k get pod manual-schedule -o yaml > 9.yaml

    # 9.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: "2020-09-04T15:51:02Z"
      labels:
        run: manual-schedule
      managedFields:
    ...
        manager: kubectl-run
        operation: Update
        time: "2020-09-04T15:51:02Z"
      name: manual-schedule
      namespace: default
      resourceVersion: "3515"
      selfLink: /api/v1/namespaces/default/pods/manual-schedule
      uid: 8e9d2532-4779-4e63-b5af-feb82c74a935
    spec:
      nodeName: cluster2-controlplane1 # add the controlplane node name
      containers:
      - image: httpd:2.4-alpine
        imagePullPolicy: IfNotPresent
        name: manual-schedule
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
          name: default-token-nxnc7
          readOnly: true
      dnsPolicy: ClusterFirst

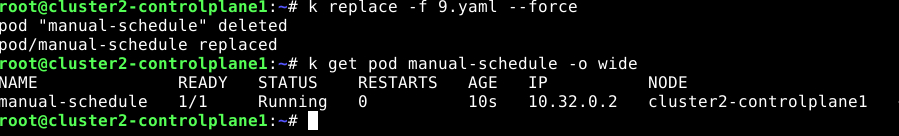

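...

Because spec.nodeName of an existing Pod cannot be changed with kubectl apply or kubectl edit, we need to delete and re-create the Pod, or replace it: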

k -f 9.yaml replace --force

k get pod manual-schedule -o wide
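
The manual edit of the exported file can also be scripted. The sketch below inserts nodeName directly under the top-level spec: key. add_node_name and the output file name 9-scheduled.yaml are hypothetical, and the helper assumes spec: sits alone on its line at column 0, as it does in kubectl get -o yaml output.

```shell
# Hypothetical helper: print a Pod manifest with spec.nodeName added.
# $1 = manifest file, $2 = node name
add_node_name() {
  awk -v node="$2" '
    { print }
    # Add nodeName once, right after the top-level "spec:" line.
    /^spec:[[:space:]]*$/ && !added { print "  nodeName: " node; added = 1 }
  ' "$1"
}

# Example use against the cluster (not executed here):
#   kubectl get pod manual-schedule -o yaml > 9.yaml
#   add_node_name 9.yaml cluster2-controlplane1 > 9-scheduled.yaml
#   kubectl replace --force -f 9-scheduled.yaml
```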

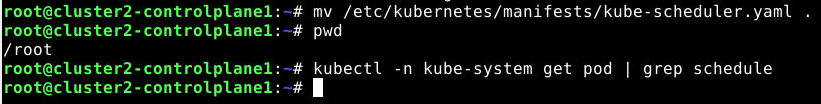

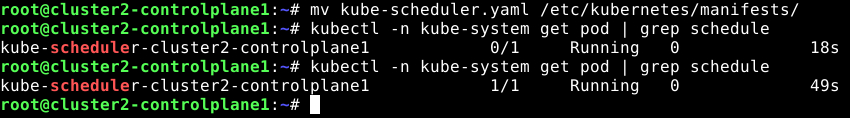
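
As an aside, a Pod that is still pending can also be assigned to a node by posting a Binding object to its binding subresource, instead of replacing it with nodeName set. This is an alternative to the solution above, not part of it, and should be treated as an untested sketch. binding_json and binding.json are hypothetical names, and the URL assumes the default namespace.

```shell
# Hypothetical helper: print a Binding object that assigns Pod $1 to node $2.
binding_json() {
  cat <<EOF
{
  "apiVersion": "v1",
  "kind": "Binding",
  "metadata": { "name": "$1" },
  "target": { "apiVersion": "v1", "kind": "Node", "name": "$2" }
}
EOF
}

# Example use (not executed here; the Pod must still be unscheduled):
#   binding_json manual-schedule cluster2-controlplane1 > binding.json
#   kubectl create --raw /api/v1/namespaces/default/pods/manual-schedule/binding -f binding.json
```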

Start the scheduler again:

ssh cluster2-controlplane1

root@cluster2-controlplane1:~# mv kube-scheduler.yaml /etc/kubernetes/manifests/

root@cluster2-controlplane1:~# kubectl -n kube-system get pod | grep schedule

root@cluster2-controlplane1:~# exit

k run manual-schedule2 --image=httpd:2.4-alpine
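
The task also asks to check that the second Pod runs on cluster2-node1. A minimal sketch of that check follows; wait_and_show_node is a hypothetical helper name and the 60 second timeout is an arbitrary choice.

```shell
# Hypothetical helper: wait until a Pod is Ready, then print the node it runs on.
wait_and_show_node() {
  kubectl wait --for=condition=Ready "pod/$1" --timeout=60s >/dev/null &&
    kubectl get pod "$1" -o jsonpath='{.spec.nodeName}'
}
```

If the scheduler is working again, wait_and_show_node manual-schedule2 should print cluster2-node1, the node named in the task. If the Pod stays pending until the timeout, the scheduler has probably not come back up.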