Use context: kubectl config use-context k8s-c1-H

Use Namespace project-tiger for the following. Create a Deployment named deploy-important with label id=very-important (the Pods should also have this label) and 3 replicas. It should contain two containers, the first named container1 with image nginx:1.17.6-alpine and the second named container2 with image kubernetes/pause.

There should only ever be one Pod of that Deployment running on any one worker node. We have two worker nodes: cluster1-node1 and cluster1-node2. Because the Deployment has three replicas, the result should be one Pod running on each of the two nodes. The third Pod won't be scheduled unless a new worker node is added.

In a way we kind of simulate the behaviour of a DaemonSet here, but using a Deployment and a fixed number of replicas.


Solution:

There are two ways to solve this: one uses podAntiAffinity, the other uses topologySpreadConstraints.
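The commands in this solution use the short forms k and $do, which this post never defines. A minimal sketch of a setup that would make them work, assuming a bash-like shell: k is taken to be a thin wrapper around kubectl and $do to hold the client-side dry-run flags. Both definitions are assumptions, not something the post states.

```shell
# Assumed helpers (not defined in this post).
# k: short wrapper so "k ..." behaves like "kubectl ...".
k() { kubectl "$@"; }

# do: flags that make "kubectl create" print a manifest instead of creating
# the object. It is expanded unquoted ($do) so it splits into separate words.
do="--dry-run=client -o yaml"
```

With these in place, a command ending in $do > 12.yaml writes the generated manifest to 12.yaml without touching the cluster. Shells that do not word-split unquoted variables (zsh, for example) would need the flags written out in full.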

PodAntiAffinity

k -n project-tiger create deployment \
  --image=nginx:1.17.6-alpine deploy-important $do > 12.yaml

vim 12.yaml

# 12.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    id: very-important # change
  name: deploy-important
  namespace: project-tiger # important
spec:
  replicas: 3 # change
  selector:
    matchLabels:
      id: very-important # change
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        id: very-important # change
    spec:
      containers:
      - image: nginx:1.17.6-alpine
        name: container1 # change
        resources: {}
      - image: kubernetes/pause # add
        name: container2 # add
      affinity: # add
        podAntiAffinity: # add
          requiredDuringSchedulingIgnoredDuringExecution: # add
          - labelSelector: # add
              matchExpressions: # add
              - key: id # add
                operator: In # add
                values: # add
                - very-important # add
            topologyKey: kubernetes.io/hostname # add
status: {}

TopologySpreadConstraints

# 12.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    id: very-important # change
  name: deploy-important
  namespace: project-tiger # important
spec:
  replicas: 3 # change
  selector:
    matchLabels:
      id: very-important # change
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        id: very-important # change
    spec:
      containers:
      - image: nginx:1.17.6-alpine
        name: container1 # change
        resources: {}
      - image: kubernetes/pause # add
        name: container2 # add
      topologySpreadConstraints: # add
      - maxSkew: 1 # add
        topologyKey: kubernetes.io/hostname # add
        whenUnsatisfiable: DoNotSchedule # add
        labelSelector: # add
          matchLabels: # add
            id: very-important # add
status: {}

Create the corresponding Deployment:

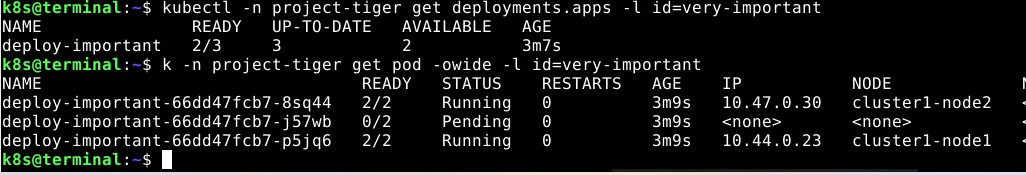

k -f 12.yaml create

We then check the Deployment status. It shows 2/3 replicas ready, because one of the Pods could not be scheduled:

k -n project-tiger get deploy -l id=very-important

k -n project-tiger get pod -o wide -l id=very-important
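To confirm the one-Pod-per-node outcome without reading the wide output by eye, the Pods can be tallied per node. The sketch below is only an illustration: pods_per_node is a hypothetical helper name, and it assumes its input is one "pod-name node-name" pair per line.

```shell
# Read "<pod-name> <node-name>" lines on stdin and print "<node> <count>",
# sorted by node name. A Pod that is still Pending has no node, so its line
# has a single field and is skipped.
pods_per_node() {
  awk 'NF >= 2 { count[$2]++ } END { for (node in count) print node, count[node] }' | sort
}

# Possible usage against the cluster (requires kubectl access):
# kubectl -n project-tiger get pod -l id=very-important \
#   -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.spec.nodeName}{"\n"}{end}' \
#   | pods_per_node
```

If the constraint works as intended, each worker node should appear once with a count of 1, and the Pending Pod should not appear at all.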