Use context: kubectl config use-context k8s-c2-AC

Create a Pod named check-ip in Namespace default using image httpd:2.4.41-alpine. Expose it on port 80 as a ClusterIP Service named check-ip-service. Remember/output the IP of that Service.

Change the Service CIDR to 11.96.0.0/12 for the cluster.

Then create a second Service named check-ip-service2 pointing to the same Pod to check if your settings did take effect. Finally check if the IP of the first Service has changed.

Solution:

```
kubectl config use-context k8s-c2-AC
```

Create the Pod and expose its port (`k` is used as shorthand for `kubectl`):

```
k run check-ip --image=httpd:2.4.41-alpine
```

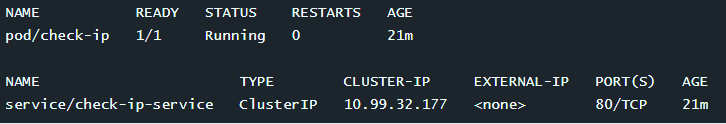

```
k expose pod check-ip --name check-ip-service --port 80
```

Check the IPs of the Pod and the Service:

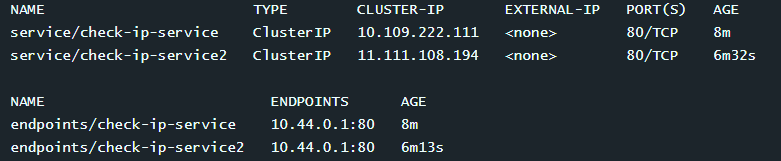

```
k get svc,ep -l run=check-ip
```

Now change the Service CIDR. SSH into the control plane node:

```
ssh cluster2-controlplane1
```
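The `--service-cluster-ip-range` flag has to be changed in two static Pod manifests, kube-apiserver and kube-controller-manager. As a non-interactive alternative to editing each file in vim as shown next, here is a minimal sketch, assuming GNU sed and a root shell on cluster2-controlplane1. The script name `set-service-range.sh` and the `/root` backup location are hypothetical choices, not part of the original solution.

```shell
#!/usr/bin/env bash
# Sketch (assumptions: GNU sed, run as root on the control plane node).
# Rewrites the value of --service-cluster-ip-range in every manifest passed as an argument.
set -euo pipefail

NEW_RANGE="11.96.0.0/12"

set_service_range() {
  local manifest="$1"
  # Replace only what follows the flag's "=" up to the next whitespace, so the
  # indentation, the leading "- " and any trailing comment are kept.
  sed -i -E "s#(--service-cluster-ip-range=)[^[:space:]]+#\1${NEW_RANGE}#" "$manifest"
}

for manifest in "$@"; do
  # Back up OUTSIDE /etc/kubernetes/manifests: the kubelet may also try to load
  # an extra file left in that directory. /root is a hypothetical location.
  cp "$manifest" "/root/$(basename "$manifest").bak"
  set_service_range "$manifest"
  # Print the edited line so the new value can be checked by eye.
  grep -e '--service-cluster-ip-range' "$manifest"
done
```

Usage: `bash set-service-range.sh /etc/kubernetes/manifests/kube-apiserver.yaml /etc/kubernetes/manifests/kube-controller-manager.yaml`. The kubelet watches the manifest directory and recreates both static Pods after the files change, exactly as it does after a vim edit.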

```
root@cluster2-controlplane1:~# vim /etc/kubernetes/manifests/kube-apiserver.yaml
```

```yaml
# /etc/kubernetes/manifests/kube-apiserver.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    component: kube-apiserver
    tier: control-plane
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-apiserver
    - --advertise-address=192.168.100.21
    ...
    - --service-account-key-file=/etc/kubernetes/pki/sa.pub
    - --service-cluster-ip-range=11.96.0.0/12             # change
    - --tls-cert-file=/etc/kubernetes/pki/apiserver.crt
    - --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
```

Then edit the kube-controller-manager manifest, which also takes a `--service-cluster-ip-range` flag:

```
root@cluster2-controlplane1:~# vim /etc/kubernetes/manifests/kube-controller-manager.yaml
```

```yaml
# /etc/kubernetes/manifests/kube-controller-manager.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    component: kube-controller-manager
    tier: control-plane
  name: kube-controller-manager
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-controller-manager
    - --allocate-node-cidrs=true
    - --authentication-kubeconfig=/etc/kubernetes/controller-manager.conf
    - --authorization-kubeconfig=/etc/kubernetes/controller-manager.conf
    - --bind-address=127.0.0.1
    - --client-ca-file=/etc/kubernetes/pki/ca.crt
    - --cluster-cidr=10.244.0.0/16
    - --cluster-name=kubernetes
    - --cluster-signing-cert-file=/etc/kubernetes/pki/ca.crt
    - --cluster-signing-key-file=/etc/kubernetes/pki/ca.key
    - --controllers=*,bootstrapsigner,tokencleaner
    - --kubeconfig=/etc/kubernetes/controller-manager.conf
    - --leader-elect=true
    - --node-cidr-mask-size=24
    - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
    - --root-ca-file=/etc/kubernetes/pki/ca.crt
    - --service-account-private-key-file=/etc/kubernetes/pki/sa.key
    - --service-cluster-ip-range=11.96.0.0/12         # change
    - --use-service-account-credentials=true
```

Use crictl to check that the kube-apiserver and kube-controller-manager containers have restarted:

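```
root@cluster2-controlplane1:~# crictl ps | grep -E 'kube-apiserver|kube-controller-manager'
```

A recent value in the CREATED column shows that a container was restarted.

Check the IPs of the existing Pod and Service. They have not changed: an existing Service keeps the ClusterIP it was assigned when it was created.

```
k get pod,svc -l run=check-ip
```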

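Create the second Service and check again. check-ip-service2 gets an IP from the new 11.96.0.0/12 range, which shows that the setting has taken effect:

```
k expose pod check-ip --name check-ip-service2 --port 80
k get svc,ep -l run=check-ip
```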
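To confirm that check-ip-service2 really received an address inside 11.96.0.0/12 (11.96.0.0 to 11.111.255.255) and that check-ip-service did not, a small helper can test CIDR membership. This is a minimal IPv4-only sketch; the function names `ip_to_int` and `ip_in_cidr` are hypothetical and the input is not validated.

```shell
#!/usr/bin/env bash
# Sketch: IPv4-only CIDR membership test, no input validation.
# No "set -e" here, so the functions can be pasted into an interactive shell safely.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# ip_in_cidr IP CIDR: exit status 0 if IP lies inside CIDR, 1 otherwise.
ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}"
  # Netmask with the top $bits bits set, truncated to 32 bits.
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  # Same network prefix after masking means the address is in the block.
  (( ( $(ip_to_int "$ip") & mask ) == ( $(ip_to_int "$net") & mask ) ))
}
```

After pasting the two functions into the shell, the check could look like `ip_in_cidr "$(kubectl get svc check-ip-service2 -o jsonpath='{.spec.clusterIP}')" 11.96.0.0/12 && echo "in new range"`; the same call for check-ip-service should fail, since its IP came from the old range.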